Tuesday, June 10, 2025

Language decoding using fMRI scales with the amount of training data

If I were to give you fMRI recordings of a participant's brain activity, could you guess what they were hearing while the data was being recorded? That is the task of decoding.

Previous work has shown that some properties of language can be decoded from fMRI signals. Our study builds on these results and confirms that language can, to some extent, be decoded from fMRI. We improve significantly on past endeavours by showing that our models generalise to unseen stimuli: a model can infer the content of a sentence from brain recordings even though that sentence was not part of its training data.

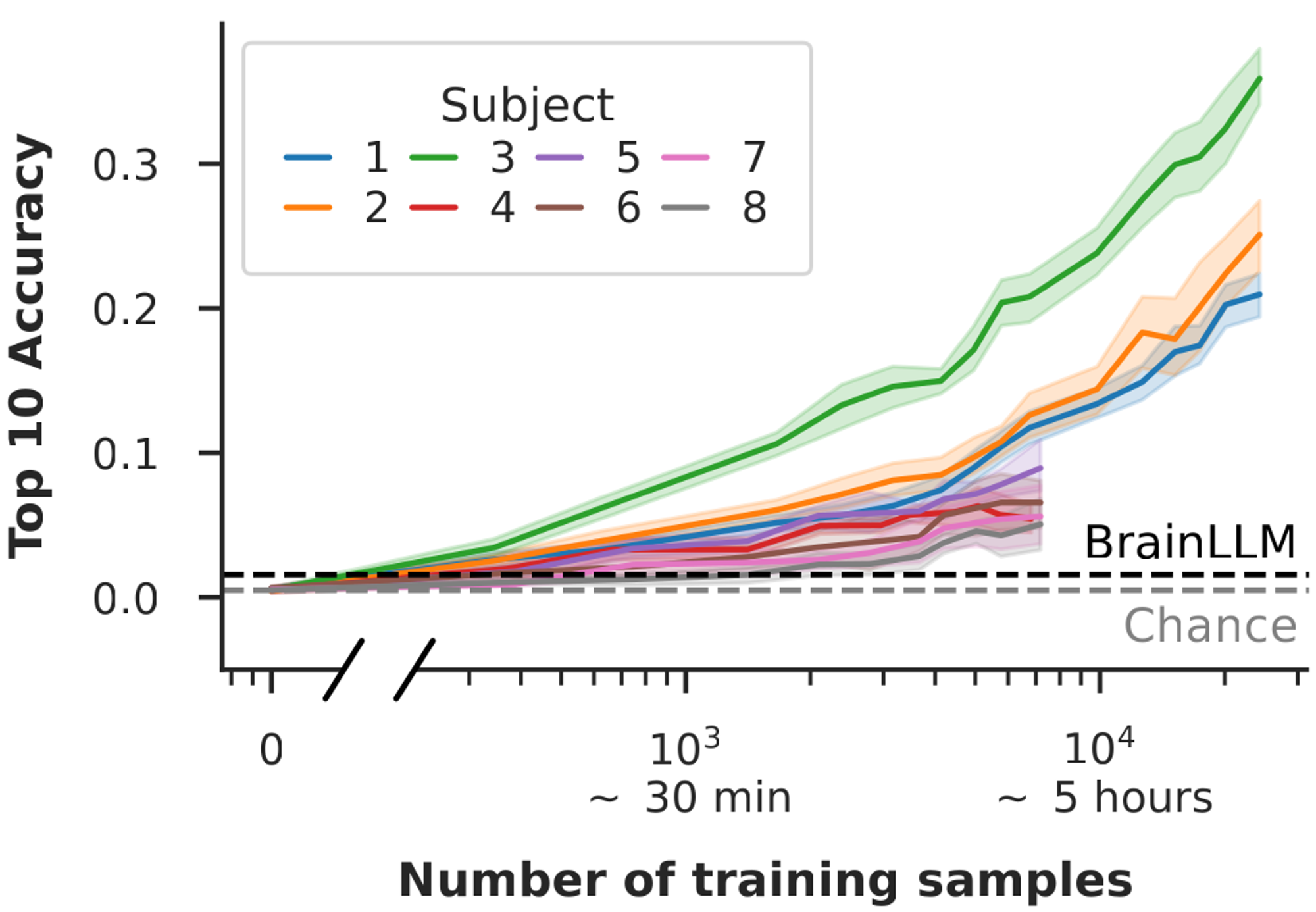

In essence, our models are evaluated on the following task. After training, each model is presented with a set of 2000 unseen sentences. It is then given unseen brain signals and asked to guess which of the 2000 sentences was being heard when these signals were recorded, by ranking all sentences from most to least likely. The results reported in the figure below show that, with 13 hours of training data for participant 3, the sentence actually heard by the participant ranks among the top 10 in more than 30% of cases!
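To make this evaluation concrete, here is a minimal sketch of a top-k retrieval metric. It assumes, hypothetically, that the decoder outputs one vector per recording that can be compared by cosine similarity with an embedding of each candidate sentence; the function name `top_k_accuracy` and this embedding setup are illustrative, not the study's actual implementation. The sketch also makes the chance level explicit: with 2000 candidates, a random ranking places the true sentence in the top 10 only 10/2000 = 0.5% of the time, which puts the 30% figure in perspective.

```python
import numpy as np


def top_k_accuracy(predicted, candidates, true_idx, k=10):
    """Fraction of trials whose true sentence ranks among the top k candidates.

    predicted:  (n_trials, d) vectors decoded from brain signals (hypothetical)
    candidates: (n_sentences, d) embeddings of the candidate sentences
    true_idx:   (n_trials,) index of the sentence actually heard on each trial
    """
    # Normalise rows so that dot products are cosine similarities.
    p = predicted / np.linalg.norm(predicted, axis=1, keepdims=True)
    c = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    scores = p @ c.T  # (n_trials, n_sentences)

    # Rank of the true sentence = number of candidates scoring strictly higher
    # (ties are resolved in favour of the true sentence).
    true_scores = scores[np.arange(len(true_idx)), true_idx]
    ranks = (scores > true_scores[:, None]).sum(axis=1)
    return float(np.mean(ranks < k))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_sentences, d, n_trials = 2000, 64, 500
    candidates = rng.standard_normal((n_sentences, d))
    true_idx = rng.integers(0, n_sentences, size=n_trials)
    # Synthetic "decoded" vectors: true embedding plus Gaussian noise.
    predicted = candidates[true_idx] + 2.0 * rng.standard_normal((n_trials, d))
    print("top-10 accuracy:", top_k_accuracy(predicted, candidates, true_idx))
    print("chance level:", 10 / n_sentences)  # 0.005
```

Counting the candidates that score strictly higher than the true sentence gives its rank directly, without sorting all 2000 scores for every trial.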

These curves also suggest that more data per participant would very likely yield a better model. This paper therefore explores what type of additional data should be acquired: in particular, should we scan participants who listen to the same stimuli, or should we favour diverse stimuli? Our study concludes that, in the current data regime, these choices do not affect the quality of models trained on data from multiple participants. The answer may differ for much larger datasets, with more data collected per participant and more participants overall.

You can freely access our research article online.